Abstract

PL2Map simultaneously predicts the 3D coordinates of points and lines for high-accuracy camera relocalization.

Recent advances in visual localization and mapping have shown considerable success in integrating point and line features. However, extending the localization framework with additional mapping components often increases the memory and computation required for feature matching. In this study, we show how a lightweight neural network can learn to represent both 3D point and line features and achieve leading pose accuracy by harnessing multiple learned mappings. Specifically, we use a single transformer block to encode line features, turning them into distinctive point-like descriptors. We then treat the point and line descriptor sets as distinct yet interconnected feature sets. By combining self- and cross-attention across several graph layers, our method refines each feature before regressing the 3D maps with two simple MLPs. In comprehensive experiments, our indoor localization results surpass those of Hloc and Limap in both point-based and line-assisted configurations. In outdoor scenarios, our method achieves a significant lead, marking the largest improvement over state-of-the-art learning-based methods.
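
To make the data flow described in the abstract concrete, here is a minimal NumPy sketch. Everything in it is an assumption for illustration, not the PL2Map implementation: the descriptor width `D`, single-head attention, the number of layers, running self-attention before cross-attention inside each layer, the two-layer MLP heads, and the hypothetical class name `PointLineRegressorSketch`. Line descriptors are assumed to already be the point-like outputs of the line-encoding transformer block, which is not modeled here. Weights are random, so the outputs carry no geometric meaning; only the shapes (N×3 points, M×6 lines) follow the text.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 32  # hypothetical descriptor width


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(queries, keys_values, Wq, Wk, Wv):
    """Single-head scaled dot-product attention."""
    q, k, v = queries @ Wq, keys_values @ Wk, keys_values @ Wv
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v


def dense(n_in, n_out):
    """Random weight matrix with 1/sqrt(n_in) scaling."""
    return rng.standard_normal((n_in, n_out)) / np.sqrt(n_in)


def mlp(x, W1, W2):
    """Two-layer MLP head with a ReLU in between."""
    return np.maximum(x @ W1, 0.0) @ W2


class PointLineRegressorSketch:
    """Hypothetical random-weight illustration of the data flow, not a trained model."""

    def __init__(self, num_layers=2):
        names = ("self_p", "self_l", "cross_p", "cross_l")
        self.layers = [{n: [dense(D, D) for _ in range(3)] for n in names}
                       for _ in range(num_layers)]
        self.point_head = (dense(D, D), dense(D, 3))  # regresses P_i in R^3
        self.line_head = (dense(D, D), dense(D, 6))   # regresses L_i in R^6

    def __call__(self, d_p, d_l):
        for layer in self.layers:
            # Self-attention within each descriptor set (residual update).
            d_p = d_p + attention(d_p, d_p, *layer["self_p"])
            d_l = d_l + attention(d_l, d_l, *layer["self_l"])
            # Cross-attention: points attend to lines and lines to points,
            # both computed from the same inputs before either is updated.
            d_p, d_l = (d_p + attention(d_p, d_l, *layer["cross_p"]),
                        d_l + attention(d_l, d_p, *layer["cross_l"]))
        return mlp(d_p, *self.point_head), mlp(d_l, *self.line_head)


d_p = rng.standard_normal((100, D))  # N = 100 point descriptors
d_l = rng.standard_normal((40, D))   # M = 40 line descriptors (assumed already encoded)
model = PointLineRegressorSketch()
P, L = model(d_p, d_l)
print(P.shape, L.shape)  # (100, 3) (40, 6)
```

Computing both cross-attention updates from the same inputs keeps the point and line branches symmetric within a layer; a real model could equally alternate them.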

PL2Map Pipeline Overview

Assume that we have a set of 2D keypoints $\{\mathbf{p}_{i}\}^{N}$ and a set of 2D line segments $\{\mathbf{l}_{i}\}^{M}$ extracted from an image $\mathbf{I}^{r}$, with associated visual descriptors $\{\mathbf{d}_{i}^{p}\}^{N}$ and $\{\mathbf{d}_{i}^{l}\}^{M}$, respectively. Here, the superscript $r$ indicates that the image comes from the reference database used to build the 3D point and line map. We aim to learn a function $\mathcal{F}(\cdot)$ that takes the two sets of visual descriptors $\{\mathbf{d}_{i}^{p}\}^{N}$ and $\{\mathbf{d}_{i}^{l}\}^{M}$ as input and outputs the corresponding sets of 3D points $\{\mathbf{P}_{i}\in \mathbb{R}^{3}\}^{N}$ and 3D lines $\{\mathbf{L}_{i} \in \mathbb{R}^{6}\}^{M}$ in the world coordinate system. The ultimate goal is to estimate the six-degree-of-freedom (6-DoF) camera pose $\mathbf{T} \in \mathbb{R}^{4\times4}$ of any new query image $\mathbf{I}$ captured in the same environment.
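
Once $\mathcal{F}$ has produced 3D points and lines, the pose $\mathbf{T}$ is found by fitting them to the 2D observations in the query image. The sketch below shows the reprojection residuals such a fit would typically minimize. It rests on assumptions not stated above: a pinhole intrinsic matrix `K`, a world-to-camera convention for $\mathbf{T}$, and each $\mathbf{L}_{i} \in \mathbb{R}^{6}$ stored as two stacked 3D endpoints. The helper names are hypothetical, and the actual solver used by PL2Map is not described here.

```python
import numpy as np


def make_pose(R, t):
    """Assemble a 4x4 rigid transform T = [R | t] (assumed world -> camera)."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def project(K, T, X):
    """Project an Nx3 array of world points to Nx2 pixel coordinates."""
    Xh = np.hstack([X, np.ones((len(X), 1))])  # homogeneous world points
    Xc = (T @ Xh.T).T[:, :3]                    # points in the camera frame
    uvw = (K @ Xc.T).T
    return uvw[:, :2] / uvw[:, 2:3]


def point_residuals(K, T, P3d, p2d):
    """Pixel distance between projected 3D points and their 2D keypoints."""
    return np.linalg.norm(project(K, T, P3d) - p2d, axis=1)


def line_residuals(K, T, L3d, l2d):
    """Distance of each projected 3D endpoint to the infinite observed 2D line.

    L3d: Mx6 (two 3D endpoints per line), l2d: Mx4 (two 2D endpoints).
    Returns an Mx2 array, one column per endpoint.
    """
    a = project(K, T, L3d[:, :3])
    b = project(K, T, L3d[:, 3:])
    ones = np.ones((len(l2d), 1))
    # Homogeneous 2D line through the observed endpoints, normalized so that
    # line . [x, y, 1] is a signed distance in pixels.
    lines = np.cross(np.hstack([l2d[:, :2], ones]), np.hstack([l2d[:, 2:], ones]))
    lines /= np.linalg.norm(lines[:, :2], axis=1, keepdims=True)

    def dist(x):
        return np.abs(np.sum(lines * np.hstack([x, ones]), axis=1))

    return np.stack([dist(a), dist(b)], axis=1)


# Toy example: the world origin lies 2 m in front of the camera.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
T = make_pose(np.eye(3), np.array([0.0, 0.0, 2.0]))
P3d = np.array([[0.0, 0.0, 0.0], [0.5, -0.2, 1.0]])
p2d = project(K, T, P3d)  # perfect keypoint observations
L3d = np.array([[-0.5, 0.0, 0.0, 0.5, 0.0, 0.0]])
l2d = np.array([[100.0, 245.0, 500.0, 245.0]])  # observed segment 5 px lower
print(point_residuals(K, T, P3d, p2d))
print(line_residuals(K, T, L3d, l2d))
```

Measuring line error as endpoint-to-infinite-line distance means the predicted 3D segment need not match the detected 2D segment's extent, only its supporting line.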

Results on KingsCollege (Cambridge Landmarks)

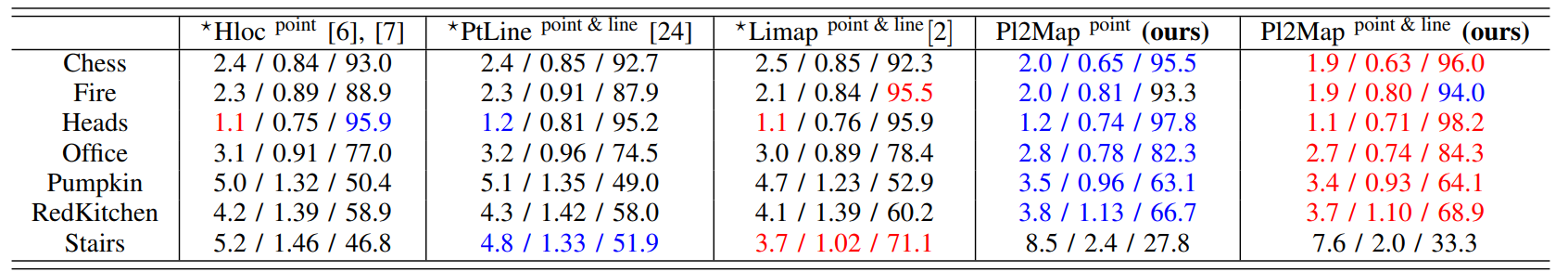

Localization Results on the 7-Scenes Dataset

We report the median translation and rotation errors (in cm and degrees) and the pose accuracy (% of query images localized within 5 cm and 5°) of different relocalization methods that use points and lines. Methods marked with $^{\star}$ are feature-matching (FM) based. Results in red are the best and those in blue are the second best.
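
The three reported metrics can be computed from estimated and ground-truth poses as in the minimal sketch below. It assumes 4×4 world-to-camera poses with translations in metres, measures translation error between camera centres, and uses strict thresholds (`<`); these conventions and the helper names are our assumptions rather than details taken from the evaluation behind the table.

```python
import numpy as np


def rotation_error_deg(R_est, R_gt):
    """Angle of the relative rotation R_est^T R_gt, in degrees."""
    cos = (np.trace(R_est.T @ R_gt) - 1.0) / 2.0
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))  # clip guards rounding


def camera_centre(T):
    """Camera centre c = -R^T t for an (assumed) world -> camera pose."""
    return -T[:3, :3].T @ T[:3, 3]


def translation_error_cm(T_est, T_gt):
    # Poses are assumed to be in metres; scale by 100 to report centimetres.
    return 100.0 * np.linalg.norm(camera_centre(T_est) - camera_centre(T_gt))


def summarize(est_poses, gt_poses, t_thresh_cm=5.0, r_thresh_deg=5.0):
    """Median translation (cm), median rotation (deg), and % within both thresholds."""
    pairs = list(zip(est_poses, gt_poses))
    t_err = np.array([translation_error_cm(e, g) for e, g in pairs])
    r_err = np.array([rotation_error_deg(e[:3, :3], g[:3, :3]) for e, g in pairs])
    accuracy = 100.0 * np.mean((t_err < t_thresh_cm) & (r_err < r_thresh_deg))
    return float(np.median(t_err)), float(np.median(r_err)), float(accuracy)
```

A query counts toward the accuracy only if it passes both thresholds, so a pose with 3 cm but 10° error is a failure even though its translation is within bounds.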

Citation

@article{bui2024pl2map,
  title={Representing 3D sparse map points and lines for camera relocalization},
  author={Bui, Bach-Thuan and Bui, Huy-Hoang and Tran, Dinh-Tuan and Lee, Joo-Ho},
  journal={arXiv preprint arXiv:2402.18011},
  year={2024}
}